Organizing Committee

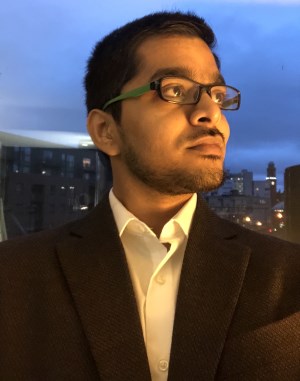

Raj Parihar

Dr. Raj Parihar is an AI infrastructure architect and deep-tech founder with 15+ years of experience across silicon architecture, large-scale AI systems, and data-center intelligence. He is Co-Founder and Chief Development Officer of Runara.ai, where he leads closed-loop telemetry and orchestration systems for optimizing AI inference efficiency, and Founder and CEO of AllyIn.ai, building a trusted intelligence layer for AI data centers and energy infrastructure. Previously, he was an ML ASIC Architect at Meta, leading performance modeling and telemetry for MTIA inference chips, and held senior architecture roles at d-Matrix, Microsoft, Cadence (Tensilica), and Imagination Technologies (MIPS). He is an ACM ISMM Best Paper Award recipient, author of 8+ top-tier architecture publications, Co-Founder of the EMC² Workshop Series, and holds a PhD and MS from the University of Rochester and a BE from BITS Pilani.

Satyam Srivastava

Dr. Satyam Srivastava is the Chief AI Software Architect at d-Matrix Corporation, where he builds the software stack for new AI accelerators. In his prior role at Intel, he worked on enabling machine learning and media systems on Intel compute architectures. His interests include machine learning, visual computing, and compute accelerators. Dr. Srivastava obtained his doctorate from Purdue University (West Lafayette, IN) and his bachelor’s degree from Birla Institute of Technology and Science, Pilani (India).

Ananya Pareek

Ananya Pareek is an ML Performance and Co-design Engineer at Google, where he currently works on optimizing hardware platforms from a performance-power tradeoff perspective. Previously, he worked at Apple on System Performance Architecture and Modeling, and at Samsung on GPU shader and CPU core pipelines and on ISA extensions for faster ML and graphics computation. His interests are in developing hardware platforms for ML/Deep Learning, HW/SW co-design, and modeling systems for optimization. He received his M.S. degree from the University of Rochester, NY, and his B.Tech. from the Indian Institute of Technology Kanpur, India.

Sushant Kondguli

Dr. Sushant Kondguli is a Graphics Architect at Meta Reality Labs Research, where his research focuses on low-power architectures for on-head rendering devices. Prior to that, Dr. Kondguli was a Mobile GPU Architect at the Advanced Computing Lab of Samsung, where he helped develop the XClipse GPU architecture used in Samsung’s flagship Galaxy smartphones. He received his PhD and B.Tech. degrees from the University of Rochester and IIT Kharagpur, respectively.

Ravi Bhuchara

Ravi Bhuchara is a Sr. Software Engineer at Microsoft. He holds an MS in EECS and brings two decades of experience in kernel-mode driver development, along with deep expertise in low-level system architecture, to the accelerator landscape. He specializes in building the foundational hardware abstractions that power next-generation high-speed data processing.

Prashant Nair

Dr. Prashant J. Nair is an Associate Professor at the University of British Columbia and a Senior Principal Engineer at d-Matrix Inc. His research spans computer architecture and memory systems for AI/ML, including large language models, recommender systems, and diffusion models. Dr. Nair’s key recognitions include the 2024 TCCA Young Architect Award (the highest early-career award in computer architecture), the 2025 DSN Test of Time (ToT) Award, the HPCA 2023 Best Paper Award, a MICRO 2024 Best Paper nomination, and the HPCA 2025 Distinguished Artifact Award. Over the past decade, Dr. Nair has published over 40 top-tier papers. While still an Assistant Professor, before his promotion to Associate Professor, he was listed in all three premier Halls of Fame in computer architecture: ISCA, MICRO, and HPCA.